AI on Segregation: Artificial Intelligence Addresses Social Inequality

Artificial intelligence (AI) is increasingly shaping critical domains such as employment, education, healthcare, criminal justice, credit and housing. These systems, which analyze vast amounts of sensitive personal data, hold immense power to either perpetuate historical discrimination or dismantle patterns of segregation and bias.

What is AI on Segregation?

At its core, AI on segregation refers to the use of artificial intelligence technologies to detect, analyze and address patterns of social segregation and inequity found in data, algorithms and institutional decision making. Segregation here means structural inequities in access and outcomes, often aligned with race, gender, socioeconomic status or geography, which AI systems can either replicate or help correct.

While AI holds promise for promoting fairness and inclusion, these outcomes depend on the design, training data and governance of the models. For instance, models trained on biased historical data can embed and amplify discriminatory patterns, reproducing segregation digitally and reinforcing systemic inequality. Conversely, ethically designed AI with strong auditing mechanisms can uncover hidden biases, flag unfair practices and recommend corrections to promote equity.

Effective AI on segregation therefore requires:

- High-quality, representative and inclusive datasets capturing diverse populations.

- Transparent models and explainable AI to understand how decisions are made.

- Regular audits and bias mitigation efforts aligned with evolving ethical frameworks.

- Institutional commitments such as regulatory compliance (e.g. New York City’s Local Law 144).

The stakes are high because AI-driven decisions increasingly influence individuals’ opportunities and freedoms. Examples include credit lending, hiring, education access and criminal justice risk assessments.

For an in-depth primer on algorithmic bias and its societal impact, see Stanford’s Human-Centered AI (HAI) Algorithmic Bias Primer.

Key Areas Where AI Impacts Social Segregation

AI’s influence pervades many structural sectors where segregation either exists or is emerging through automated decision making:

- Education Access: AI-powered adaptive learning systems can personalize education, but unequal access to devices and broadband can exacerbate existing achievement gaps, disproportionately impacting low-income and minority students.

- Criminal Justice Bias: Risk assessment tools like COMPAS have shown racial disparities. A ProPublica analysis found that Black defendants who did not reoffend were nearly twice as likely as white defendants to be misclassified as high risk, fueling concerns about fairness and systemic racism in AI-assisted sentencing.

- Employment Algorithms: Automated resume screening and candidate ranking models may unintentionally discriminate against applicants from women’s colleges or minority-serving institutions unless carefully audited and adjusted.

- Healthcare Equity: Some widely used health algorithms have prioritized care for healthier white patients over sicker Black patients because they relied on biased training data. The result is unequal access to timely diagnoses and treatments, affecting millions of patients annually.

- Housing & Credit Decisions: Proprietary AI models used by financial institutions have historically under-scored women or inflated home values in specific zip codes, resulting in unfair lending and credit access disparities.

These domains highlight how AI can both mirror and amplify social segregation without thoughtful interventions.

Research by the Brookings AI Equity Lab provides extensive analysis of AI’s societal equity challenges and opportunities.

How AI Detects and Reduces Bias

1. Bias Detection Tools

Modern AI bias detection uses multiple methodological approaches to identify patterns of discrimination:

- Statistical metrics such as disparate impact ratios, false positive/negative rates and demographic parity identify imbalances in model predictions across protected groups.

- Open-source toolkits such as Aequitas (University of Chicago), IBM AI Fairness 360 (AIF360), Microsoft Fairlearn and others offer functionalities to measure and audit fairness in classification and regression models.

- Visualization and reporting help stakeholders interpret bias metrics and understand which groups are disproportionately affected.

For example, the University of Chicago’s Aequitas toolkit supports generating audit reports that detail disparities across demographics like race, gender and age and recommend mitigation steps.
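The statistical metrics listed above can be computed by hand on a small scale, which helps in reading what an audit report is saying. The sketch below is a minimal illustration in plain Python, not the API of Aequitas, AIF360 or Fairlearn. The function names and the toy data are assumptions made up for this example.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, false positive rate and false negative rate."""
    counts = defaultdict(
        lambda: {"n": 0, "selected": 0, "pos": 0, "neg": 0, "fp": 0, "fn": 0}
    )
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred
        if truth == 1:
            c["pos"] += 1
            c["fn"] += int(pred == 0)  # true positive case the model missed
        else:
            c["neg"] += 1
            c["fp"] += int(pred == 1)  # true negative case the model flagged
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
            "fnr": c["fn"] / c["pos"] if c["pos"] else float("nan"),
        }
        for group, c in counts.items()
    }


def disparate_impact_ratio(metrics):
    """Lowest group selection rate divided by the highest."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return min(rates) / max(rates) if max(rates) > 0 else float("nan")


def demographic_parity_difference(metrics):
    """Gap between the highest and lowest group selection rates."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Invented toy data: 1 = favorable outcome, groups "A" and "B" are placeholders.
y_true = [1, 0, 1, 0, 0, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

metrics = group_metrics(y_true, y_pred, groups)
ratio = disparate_impact_ratio(metrics)
gap = demographic_parity_difference(metrics)
print(metrics, ratio, gap)
```

On this toy data, group A is selected 60% of the time and group B 20%, so the disparate impact ratio is about 0.33, well under the 0.8 benchmark commonly associated with the four-fifths rule. The error rates also diverge: group A absorbs the false positives while group B absorbs the false negatives.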

In a widely publicized case, Amazon scrapped its AI recruiting tool after it penalized resumes referencing women’s colleges or groups, revealing how bias in historical hiring data translates into discriminatory algorithms.

Similarly, Twitter’s image-cropping algorithm was found to favor white faces over Black faces in thumbnail selections, prompting Twitter to disable the automated crop to avoid visual segregation online.

For a practical guide with examples, Crescendo.ai’s AI Bias Examples & Mitigation Guide is a helpful resource.

2. Ethical AI Frameworks

Institutions are increasingly adopting ethics frameworks that emphasize fairness, accountability and transparency. Key policies include:

- Formation of ethics review boards and Chief AI Ethics Officer roles to oversee AI deployments.

- Compliance with emerging legislation like the EU AI Act, which mandates bias monitoring and permits processing of sensitive data when the aim is to reduce discrimination, an allowance that has to be reconciled with GDPR constraints.

- Public disclosures of model capabilities and limitations through AI documentation and model cards.

Initiatives like the EU’s AI Act provide a regulatory backbone for ethical AI governance.

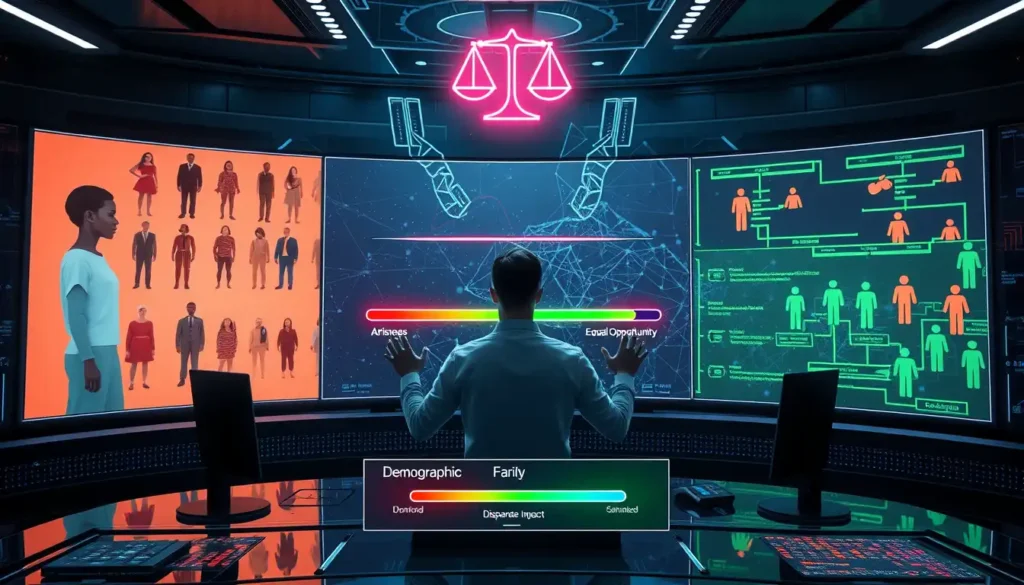

3. Algorithmic Fairness Models

Fairness-aware machine learning techniques proactively adjust models to minimize discriminatory outcomes:

- Fairness criteria differ in what they equalize: equalized odds aims to equalize error rates among groups, while demographic parity aims to equalize the distribution of decisions among groups.

- Mitigation algorithms reweight training data or adjust decision thresholds to balance false positives and negatives.

- Some firms employ independent third-party audits to validate fairness claims, such as pymetrics’ compliance with the EEOC’s four-fifths rule on hiring assessments.
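To make the reweighting idea concrete, here is a minimal sketch of one common pre-processing scheme, often called reweighing: each training example gets a weight equal to the probability expected for its group and label if the two were independent, divided by the probability actually observed. This is one technique among several, not necessarily what any firm named above uses. The function name and toy data are assumptions for illustration.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of a group's total weight that sits on positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Invented toy data: group "A" has mostly favorable labels, group "B" mostly not.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(weights, rate_a, rate_b)
```

Under-represented combinations (a favorable label in group B, an unfavorable one in group A) are weighted up, and after weighting both groups show the same positive rate. Weights like these can be passed to training routines that accept per-sample weights, such as the `sample_weight` argument many scikit-learn estimators take in `fit`.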

NIST’s Fairness Glossary covers key definitions and techniques.

4. Inclusive Technology Design

Inclusive design embeds diversity from the ground up, including:

- Use of multilingual corpora and diverse datasets representing all demographics.

- Participatory design with communities subject to AI decision-making.

- Hardware and software accessibility considerations.

For instance, education platforms collaborating with low-income schools supply devices and adapt AI tutors to work with low-bandwidth connections, ensuring equitable educational support.

The World Economic Forum’s AI Toolkit for Schools provides guidelines for equitable AI education technology.

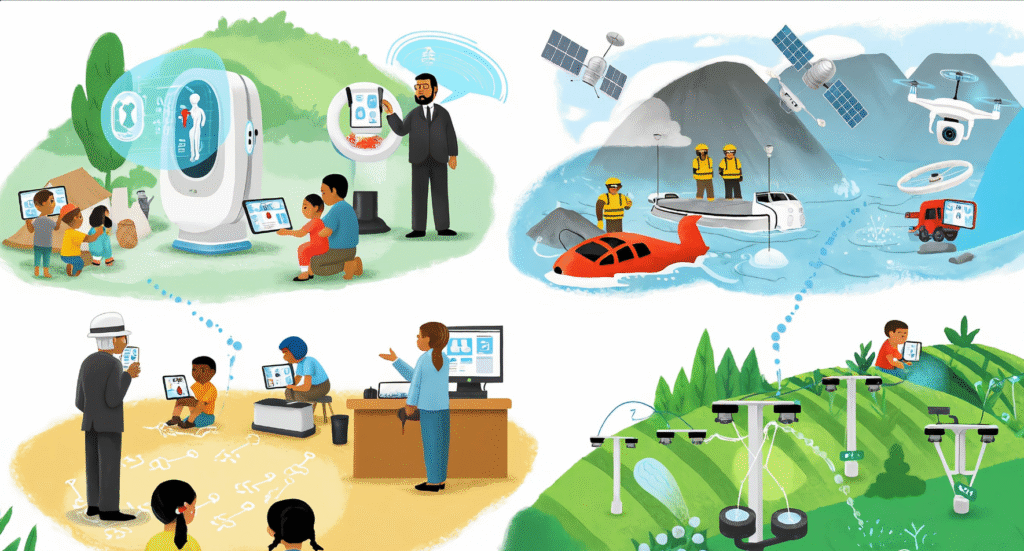

Real World Applications of AI for Social Good

A. AI in Policing and Criminal Justice

Tools like COMPAS, widely used in U.S. courts for recidivism risk assessment, faced severe criticism after studies revealed racial bias. Black defendants were more often labeled high-risk despite similar re-offense rates compared to white defendants, leading to potential over-sentencing.

In response, some jurisdictions replaced or supplemented these opaque tools with transparent models that exclude race proxies and publish validation reports. For example, a midwestern county cut racial false-positive disparities by 39% using a logistic model retrained quarterly, balancing fairness with accuracy.

Ethical auditing and transparency are key to regaining public trust in AI-assisted justice processes.

B. AI in Education

AI-driven early-warning systems use attendance, grades and socio-emotional data to identify students at risk of dropping out. In pilot programs, personalized interventions guided by these predictions lowered dropout rates among first-generation college students by 12%.

AI also helps recommend customized learning paths to underrepresented groups and suggest remedial support, advancing educational equity.

C. AI in Hiring

Under laws such as New York City’s Local Law 144, employers that use automated hiring tools must commission yearly bias audits and publicly disclose the resulting metrics. This has pushed firms to adopt fairness-tuned resume screeners that anonymize identifying information and equalize interview probabilities across demographic groups.

Compliance fosters accountability and improves employment equity.

For NYC bias audit resources, visit the Official NYC LL 144 Portal.

D. Healthcare Algorithms

One landmark case is the Optum healthcare algorithm, which initially prioritized care for healthier white patients because it was based on historical spending rather than clinical risk. A redesign focused on clinical outcomes nearly tripled the number of Black patients flagged for proactive care, reducing disparities.

This demonstrates AI’s power to identify gaps in healthcare equity and rectify them through model reframing.
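The effect of swapping the target from spending to clinical need can be seen in a toy ranking exercise. The sketch below is not the Optum model or the study's method. The records, field layout and function names are invented for illustration, and they build in the assumption that group "B" has lower past spending at the same or higher level of illness.

```python
# Hypothetical records: (patient_id, group, past_annual_cost, chronic_conditions)
patients = [
    ("p1", "A", 9000, 2),
    ("p2", "A", 8000, 1),
    ("p3", "A", 7000, 3),
    ("p4", "B", 6000, 5),
    ("p5", "B", 5000, 4),
    ("p6", "B", 4000, 2),
]


def flag_top_k(records, score_index, k):
    """Flag the k patients with the highest value in the chosen score column."""
    ranked = sorted(records, key=lambda r: r[score_index], reverse=True)
    return [r[0] for r in ranked[:k]]


def share_in_group(flagged_ids, records, group):
    """Fraction of flagged patients who belong to the given group."""
    group_of = {r[0]: r[1] for r in records}
    return sum(group_of[i] == group for i in flagged_ids) / len(flagged_ids)


# Ranking by past cost (index 2) versus by a clinical-need proxy (index 3).
by_cost = flag_top_k(patients, score_index=2, k=3)
by_need = flag_top_k(patients, score_index=3, k=3)

share_b_cost = share_in_group(by_cost, patients, "B")
share_b_need = share_in_group(by_need, patients, "B")
print(by_cost, share_b_cost)
print(by_need, share_b_need)
```

With cost as the target, nobody from group B is flagged even though that group holds the sickest patients in this toy set. Ranking by the need proxy flags two of them. The model and data are unchanged in both runs, and only the choice of label differs.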

See the seminal Science study on racial bias in health algorithms for detailed analysis.

Healthcare AI bias examples also include medical imaging systems that underperform for minority groups, emphasizing the need for inclusive training data and validation.

For a comprehensive survey on healthcare AI bias, see Paubox’s Real-world Examples of Healthcare AI Bias.

Challenges and Criticisms

Despite these advances, AI on segregation faces hurdles:

| Challenge | Explanation | Example or Impact |
|---|---|---|
| Biased Training Data | Historical inequities encoded in datasets | Apple Card’s credit limit algorithm under-scoring women |
| Model Opacity | Black-box models resist transparency and audits | Automated home valuation overstated prices in gentrifying areas |
| Regulatory Fragmentation | Conflicting data privacy vs audit requirements | GDPR vs EU AI Act’s allowances cause legal uncertainty |
| Cultural Transferability | Models trained in one region fail in different contexts | Facial recognition less accurate on darker skin tones |

AI systems can unintentionally replicate societal biases if developers don’t actively guard against them, which may worsen segregation in digital domains.

Reports like Research AIMultiple’s Bias in AI explore these issues further.

Best Practices for Ethical AI Development

- Diverse and Representative Data Collection: Use stratified sampling, synthetic minority oversampling (SMOTE) and active acquisition of underrepresented data to ensure models learn from equitable datasets.

- Transparency and Documentation: Publish detailed model cards specifying purpose, data sources, metrics and known limitations, fostering trust and enabling audits.

- Bias Mitigation Techniques: Employ pre-, in- and post-processing approaches such as re-weighting, adversarial debiasing and threshold calibration to minimize discriminatory outcomes.

- Privacy Protection: Integrate techniques such as differential privacy and federated learning to comply with regulations (e.g. GDPR) while allowing essential fairness analysis.

- Accountability Mechanisms: Establish human-in-the-loop reviews, redress processes and clear accountability for decisions in sensitive domains like credit, hiring and healthcare.

- Robustness and Security: Stress-test models against adversarial attacks that may disproportionately target vulnerable groups.

- Human-AI Collaboration: Design AI to augment rather than replace expert human judgment, for example by providing physicians with decision support rather than automated diagnoses.

- Continuous Monitoring and Re-Training: Perform periodic bias audits, monitor data shifts and re-train models as necessary to respond to evolving social contexts.

- Ethics Review Boards: Maintain independent committees empowered to veto AI deployments that fail fairness and ethical criteria.

- Community and Public Engagement: Conduct forums to explain AI use in accessible language, gather stakeholder feedback and incorporate diverse perspectives.

These tenets are crucial to ensuring AI contributes positively toward desegregation rather than reinforcing inequality.
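Of the mitigation techniques listed under best practices, threshold calibration is the easiest to sketch, since it leaves the trained model untouched and only changes where the cut-off sits. The example below is a simplified illustration: it picks, for each group, the lowest threshold from a fixed candidate list that keeps the false positive rate at or below a target. The function names, candidate thresholds and scores are assumptions made up for this example.

```python
def false_positive_rate(scores, labels, threshold):
    """Share of true negatives whose score meets or exceeds the threshold."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not negatives:
        return 0.0
    return sum(s >= threshold for s in negatives) / len(negatives)


def pick_threshold(scores, labels, target_fpr, candidates):
    """Lowest candidate threshold whose false positive rate is within target."""
    for threshold in sorted(candidates):
        if false_positive_rate(scores, labels, threshold) <= target_fpr:
            return threshold
    return max(candidates)  # fall back to the strictest candidate


# Invented toy scores and outcomes, keyed by a placeholder group name.
data = {
    "A": {"scores": [0.9, 0.8, 0.6, 0.4, 0.3], "labels": [1, 1, 0, 0, 0]},
    "B": {"scores": [0.9, 0.7, 0.6, 0.5, 0.2], "labels": [1, 0, 0, 1, 0]},
}
candidates = [0.3, 0.5, 0.7, 0.9]
target_fpr = 0.34

thresholds = {
    group: pick_threshold(d["scores"], d["labels"], target_fpr, candidates)
    for group, d in data.items()
}
print(thresholds)
```

Here a single shared threshold of 0.5 would give group B twice the false positive rate of group A, while the calibrated thresholds (0.5 for A, 0.7 for B) bring both to one in three. Whether group-specific thresholds are appropriate depends on the domain and the applicable law, so a choice like this belongs in front of the review and accountability processes described above.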

References and Further Reading

- Stanford Human-Centered AI Algorithmic Bias Primer: https://hai.stanford.edu/research/bias

- Brookings AI Equity Lab Insight: https://www.brookings.edu/events/education-and-ai-achieving-equity

- University of Chicago Aequitas Tool: https://github.com/dssg/aequitas

- Crescendo.ai AI Bias Examples & Mitigation Guide: https://www.crescendo.ai/blog/ai-bias-examples-mitigation-guide

- EU AI Act Full Text: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:32024R0587

- NIST Fairness Glossary: https://www.nist.gov/itl/ai-fairness-glossary

- World Economic Forum AI Toolkit for Schools: https://www.weforum.org/agenda/ai-education-toolkit

- NYC Local Law 144 Portal: https://www.nycbiasaudit.com

- Science Study on Racial Bias in Health Algorithms: https://www.science.org/doi/10.1126/science.aax2342

- Paubox Real-World Healthcare AI Bias: https://www.paubox.com/blog/real-world-examples-of-healthcare-ai-bias

This comprehensive exploration highlights how AI can be consciously developed and governed to combat social segregation and foster equitable outcomes across society.

Conclusion

AI on segregation stands at a pivotal juncture. Poorly managed AI systems risk perpetuating historic injustices, but ethically designed and transparently governed AI can become a precise instrument for social justice. Success stories in criminal justice reform, equitable education, bias-aware hiring and healthcare underline this promise.

Regulatory frameworks such as NYC Local Law 144 and the EU AI Act, combined with community-driven oversight and technical innovation in fairness algorithms, pave a path toward a more equitable digital future.

As AI becomes more deeply embedded in daily life, its ability to recognize, reflect and reduce segregation will be one of the defining challenges and opportunities of our time. Stakeholders across technology, policy and civil society must collaborate to ensure AI serves as a force for inclusion, fairness and human dignity.

FAQs

1. What does AI on segregation mean?

It refers to using AI to identify and fix systemic inequalities in various social systems.

2. How does ethical AI help in fighting bias?

Ethical AI ensures systems are fair, transparent, and accountable to all users.

3. Can AI be free from bias completely?

Not entirely, but proper design and audits can significantly reduce harmful bias.

4. What sectors benefit from inclusive AI?

Education, justice, healthcare, hiring, and finance gain from fair, inclusive AI applications.

5. Are there laws governing algorithmic fairness?

Some regions have guidelines, but comprehensive AI regulation is still evolving.