Meta Artificial General Intelligence: The Frontier

Meta Artificial General Intelligence is often described as the next big leap in AI and computing. The idea combines Meta’s immersive ecosystem with research toward Artificial General Intelligence (AGI): AI that approaches human cognitive capabilities. Unlike traditional AI systems trained for narrow tasks, AGI in Meta’s vision focuses on flexible reasoning, creativity, and learning across multiple domains. This article explores how Meta is influencing AGI, the technological foundation behind it, potential applications, ethical concerns, and its global impact.

What is Meta Artificial General Intelligence?

Meta Artificial General Intelligence (Meta AGI) refers to the convergence of the technological infrastructure of Meta (formerly Facebook) with the broader pursuit of AGI: AI that can generalize what it learns and perform any intellectual task a human can. Within Meta’s ecosystem, this includes AI models operating in virtual environments (such as the metaverse), on social platforms, and in smart hardware.

Unlike the narrow AI used in chatbots or facial recognition systems, AGI would have much broader capabilities:

- Reasoning

- Problem-solving

- Emotional intelligence

- Transfer learning

- Creative thinking

Meta AGI implies building cognitive-level intelligence into the company’s core technologies, from Meta Quest headsets (formerly sold under the Oculus brand) to AI-driven virtual assistants and metaverse avatars.

Historical Context: Meta’s AI Journey

Meta’s journey into AGI builds on more than a decade of AI research at FAIR, the lab founded in 2013 as Facebook AI Research and now called Fundamental AI Research. The company began integrating machine learning into its platforms early, powering features such as:

- Newsfeed personalization

- Facial recognition

- Language translation

- Chat moderation

In recent years, Meta has pivoted toward building comprehensive digital worlds (the metaverse), which require autonomous, human-like agents that can interact naturally. Within the Meta ecosystem, these virtual agents are seen as precursors to AGI.

Meta’s major steps in AGI research include:

- Development of LLaMA (Large Language Model Meta AI)

- Open-source AI language models for academic use

- Investments in Embodied AI and Neural Interfaces

Meta’s Technological Pillars Supporting AGI

1. LLaMA and Large Language Models (LLMs)

Meta’s LLaMA models are alternatives to OpenAI’s GPT models and Google’s Gemini (the successor to Bard). They power natural language understanding, translation, summarization, and complex reasoning. On the path to AGI, LLMs could evolve into generalist models capable of logical deduction, emotion recognition, and creativity.

2. Embodied AI in the Metaverse

In Meta AGI, embodied AI refers to avatars or robots that navigate 3D virtual or real environments using sensory input. These systems must:

- Learn spatial awareness

- Perform tasks

- Interact socially

- Adapt to new situations without retraining

3. Computer Vision and Perception

AGI needs to see and understand its environment. Meta’s AI research in image recognition, object detection, and multimodal perception (combining vision, sound, and touch) helps agents understand context like humans do.

4. AI Hardware: Neural Interfaces & Smart Glasses

Meta is investing in non-invasive brain-computer interfaces (BCIs) and AR/VR devices to integrate AGI with physical and virtual realities. These include:

- EMG (Electromyography) wristbands

- AR glasses with contextual awareness

- Next-gen Meta Quest VR headsets

Capabilities of Meta Artificial General Intelligence

Meta AGI aspires to replicate or exceed human cognitive functions. Key capabilities include:

- General Reasoning: Solving unfamiliar problems by adapting past experiences

- Self-supervised Learning: Learning without labeled datasets—similar to how children learn

- Commonsense Understanding: Grasping unspoken social norms and intentions

- Language Fluency: Comprehending and generating multilingual, context-rich dialogue

- Cognitive Flexibility: Shifting between topics, tasks, and perspectives seamlessly

- Emotional Intelligence: Understanding and responding to emotional cues
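
To make the self-supervised learning item above more concrete, here is a minimal sketch of how training targets can be derived from raw text with no human labels: each word is predicted from the words before it. The function name `next_word_pairs` and the `context_size` parameter are hypothetical, chosen only for illustration; this is not a description of how Meta trains its models.

```python
# Minimal sketch: build (context, target) pairs from unlabeled text.
# The "label" for each example is simply the next word in the text itself.
# next_word_pairs and context_size are illustrative names, not a real API.

def next_word_pairs(text, context_size=3):
    tokens = text.lower().split()
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tuple(tokens[i - context_size:i])  # preceding words
        target = tokens[i]                           # word to predict
        pairs.append((context, target))
    return pairs


pairs = next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

A model trained on pairs like these never needs a hand-labeled dataset, which is why the approach scales to very large text collections.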

Applications Across Meta’s Ecosystem

1. Virtual Companions in the Metaverse

AGI enables Meta avatars to serve as personal assistants, teachers, entertainers, or customer service reps, adapting to user preferences, emotions, and goals.

2. Smart Content Moderation

Instead of rigid keyword filters, Meta AGI could analyze context and nuance in posts, identifying harmful content while preserving freedom of expression.
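
The limitation of rigid keyword filters can be shown with a small sketch. The blocklist and example posts below are made up for illustration, and the filter is a deliberately naive baseline rather than any system Meta actually uses. It flags two harmless posts and misses a lightly obfuscated one, which is the gap that context-aware analysis is meant to close.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"kill", "attack"}


def keyword_flag(post):
    """Return True if any blocklisted word appears in the post."""
    words = re.findall(r"[a-z]+", post.lower())
    return any(word in BLOCKLIST for word in words)


posts = [
    "That workout will kill you, in a good way",   # benign idiom
    "Heart attack symptoms everyone should know",  # health information
    "We should att4ck them at dawn",               # obfuscated spelling
]

for post in posts:
    print(keyword_flag(post), "|", post)
```

The first two posts are flagged despite being harmless, while the third slips through because the digit breaks the keyword match.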

3. Personalized Education

AGI-driven tutors could tailor curriculum, detect learning gaps, and modify teaching strategies in Meta’s Horizon platform.

4. Healthcare Assistants

AGI integrated with Meta’s smart wearables could monitor vital signs, suggest interventions, and support remote diagnoses.

5. Creative Tools

Writers, artists, and musicians may collaborate with AGI tools to co-create art, videos, and immersive storytelling experiences.

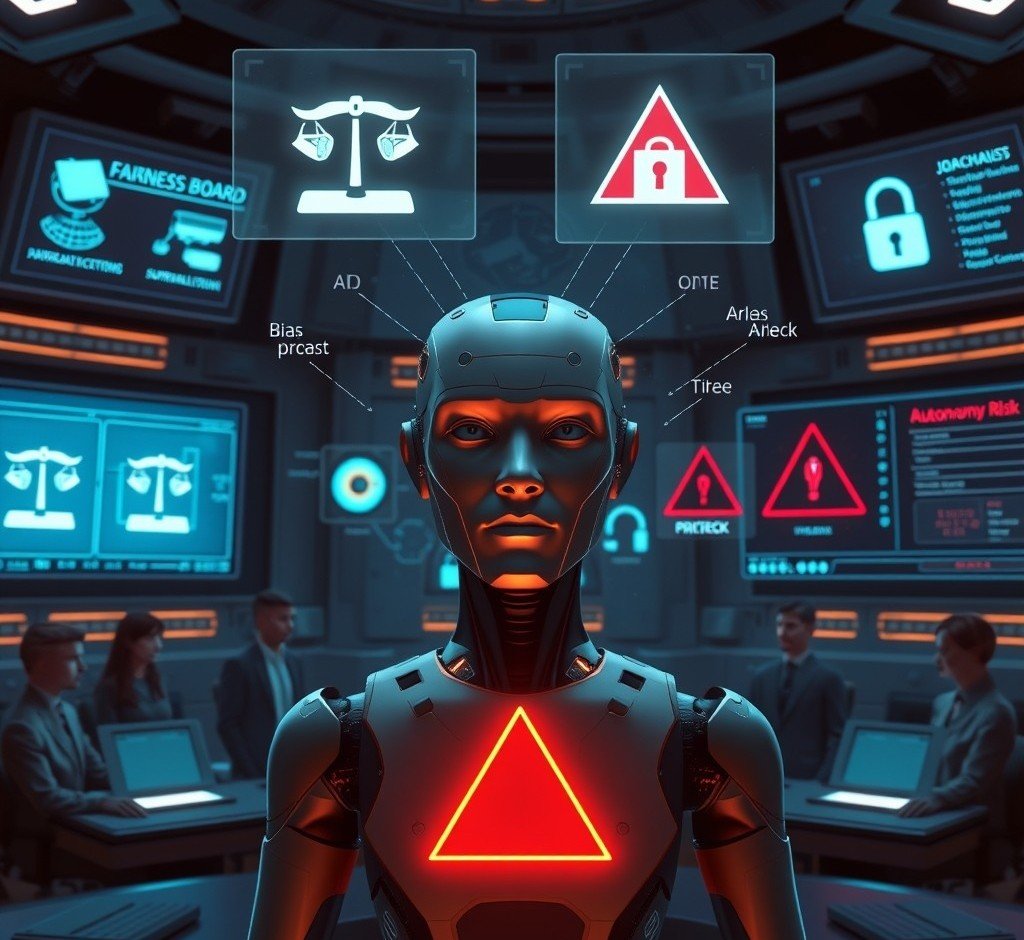

Ethical and Social Implications

Meta Artificial General Intelligence introduces profound ethical questions:

- Bias and Fairness: Training data may contain societal biases, leading AGI to make discriminatory decisions.

- Surveillance: AGI’s ability to monitor and analyze behavior raises concerns about privacy.

- Autonomy: Should AGI act independently in social or business settings?

- Transparency: How can users understand and trust AGI decisions?

- Safety: Safeguards are needed to prevent malicious use or unintended harm.

Meta has initiated AI ethics boards and partnerships to guide AGI development responsibly, but watchdog groups insist on more transparency and accountability.

AGI and Human Collaboration

Meta envisions AGI not as a human replacement, but as a co-pilot. For example:

- Writers could brainstorm with AGI avatars.

- Engineers might simulate designs with AGI before prototyping.

- Doctors could use AGI to analyze patient histories and predict outcomes.

Human-centered AGI will amplify productivity while keeping humans in the decision loop.

Future Prospects of Meta Artificial General Intelligence

Short-Term (2–3 Years):

- More realistic avatars with adaptive personalities

- Meta LLaMA 3 or 4 with AGI-level reasoning

- Wearable devices integrated with contextual AI

Medium-Term (5–7 Years):

- Fully autonomous learning agents in the metaverse

- Global rollout of AGI-powered tutors and assistants

- Emotion-aware virtual communication

Long-Term (10+ Years):

- Sentient-level artificial agents

- Neural interfaces enabling thought-driven computing

- AGI governance frameworks for safety and control

Conclusion

Meta Artificial General Intelligence represents a bold frontier in technology, aiming to replicate human thought and emotion within immersive digital experiences. As Meta integrates AGI with its social, educational, and immersive platforms, the boundary between human and machine continues to blur. This next evolution in AI has transformative potential but also demands thoughtful oversight, ethical safeguards, and inclusive innovation. Whether empowering creators, educators, or everyday users, Meta AGI is reshaping how we interact with intelligence itself.

FAQs

1. What is Meta in artificial intelligence?

In artificial intelligence, “Meta” refers to the AI research, tools, and applications of Meta Platforms, Inc. (formerly Facebook), including the Meta AI assistant.

2. Is Meta AI really an AI?

Yes. Meta AI is an AI assistant developed by Meta that handles tasks such as language understanding, image processing, and conversational chat.

3. What is Meta AI on my phone?

Meta AI on your phone is an embedded assistant feature within Meta apps like Facebook, Instagram, or WhatsApp.

4. Which AI is owned by Meta?

Meta has developed several AI systems, including LLaMA (Large Language Model Meta AI), the ESM protein language models (used for protein structure prediction), and the Meta AI assistant.

5. Is Meta AI better than ChatGPT?

Meta AI is competitive, but ChatGPT generally offers more advanced conversational capabilities and broader usage across domains.

6. Can I delete Meta AI?

No, you cannot fully delete Meta AI if it’s integrated into Meta apps, but you can limit or disable its use in settings.