AI on Segregation: How Artificial Intelligence Addresses Social Inequality

AI on Segregation is transforming how we identify and address systemic bias across digital systems and institutions. As artificial intelligence becomes more pervasive, it’s crucial to understand how AI can both reflect and remedy societal segregation.

What is AI on Segregation?

AI on segregation refers to the use of artificial intelligence to detect, analyze, and correct segregation patterns in data, systems, and society. The same tools can perpetuate inequality or, when designed and trained with care, help counter it.

Key Areas Where AI Impacts Social Segregation:

- Education Access

- Criminal Justice Bias

- Employment Algorithms

- Healthcare Equity

- Housing & Credit Decisions

How AI Detects and Reduces Bias

1. Bias Detection Tools

Bias detection tools audit training data and model outputs to uncover racial, gender, and socioeconomic bias in decision-making algorithms. By flagging patterns of unfair treatment, such as one group being approved far less often than another, they give developers data-driven evidence to act on.
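One common audit check compares how often each group receives a positive decision. The sketch below assumes decisions are available as (group, outcome) pairs; the function names and the toy loan data are hypothetical illustrations, not the API of any particular bias detection tool. It computes each group's selection rate and the disparate impact ratio (lowest rate divided by highest, where 1.0 means parity).

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive decisions (outcome == 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    # If no group receives any positive decision, treat the rates as equal.
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical loan decisions: (applicant group, 1 = approved, 0 = denied)
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

print(selection_rates(decisions))                   # {'A': 0.75, 'B': 0.25}
print(round(disparate_impact_ratio(decisions), 2))  # 0.33
```

A ratio well below 1.0, as here, signals that group B is approved far less often and that the model deserves a closer look; on its own it does not prove discrimination, since legitimate factors may also differ between groups.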

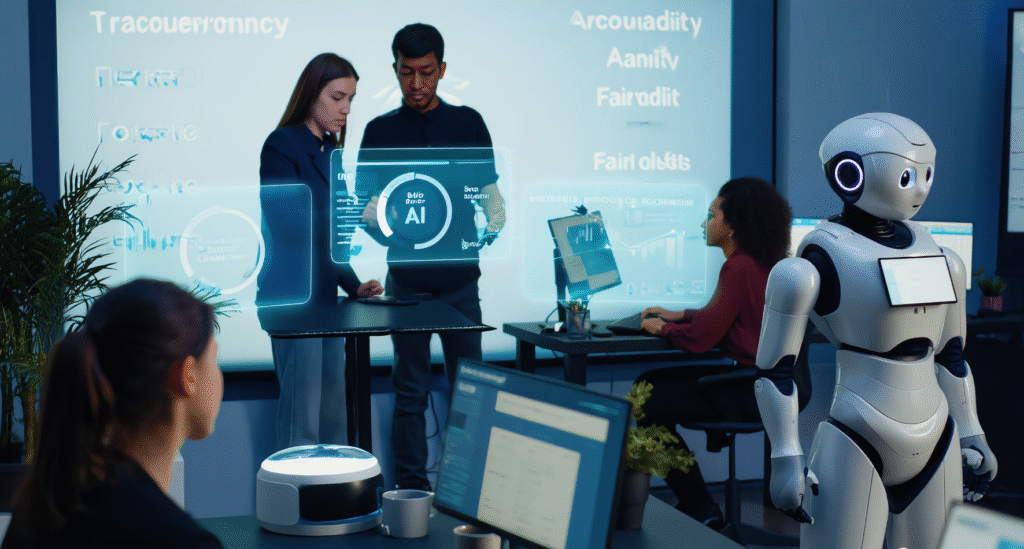

2. Ethical AI Frameworks

Implementing ethical AI means building systems around fairness, accountability, and transparency. Some companies have formed ethics review boards to monitor model outputs.

3. Algorithmic Fairness Models

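These models are designed to mitigate discriminatory results. For example, fairness-aware machine learning can reweight training examples or add fairness constraints to a classifier to narrow outcome gaps between groups. One common target is equal opportunity: qualified individuals in every group should have the same chance of a positive prediction.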
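As one concrete example of adjusting weights, the sketch below implements reweighing (Kamiran and Calders), a preprocessing technique that gives each training example the weight P(group) × P(label) / P(group, label). This is a swapped-in illustration of the general idea, not the only fairness-aware method; the function names and hiring data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label).

    Group/label combinations that are rarer than they would be if group and
    label were independent (e.g. positive labels in a disadvantaged group)
    get weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Share of one group's total weight that sits on positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

# Hypothetical historical hiring data: group A was hired 3 of 4 times, group B 1 of 4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
print([round(w, 2) for w in weights])
# [0.67, 0.67, 0.67, 2.0, 2.0, 0.67, 0.67, 0.67]
print(round(weighted_positive_rate(groups, labels, weights, "A"), 2),
      round(weighted_positive_rate(groups, labels, weights, "B"), 2))
# 0.5 0.5
```

After reweighing, both groups carry the same weighted positive rate, so a learner trained with these weights (for instance via the `sample_weight` argument that many scikit-learn estimators accept in `fit`) no longer sees the historical gap as signal. Note that reweighing balances group and label in the training data, which targets statistical parity; enforcing equal opportunity directly usually calls for in-training constraints or post-processing of decision thresholds.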

4. Inclusive Technology Design

Developers are designing systems with the full range of intended users in mind. This includes training on multilingual data and evaluating on test sets that cover diverse groups, so that no population is excluded or underserved.
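A diverse test set only helps if results are broken down by group, because a good overall score can hide poor performance for a minority of users. The sketch below assumes each test result is tagged with a group (here, a hypothetical user language); the data and function name are illustrative.

```python
from collections import defaultdict

def accuracy_by_group(examples):
    """examples: iterable of (group, true_label, predicted_label)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in examples:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical classifier results on a test set tagged by the user's language.
test_results = [
    ("en", 1, 1), ("en", 0, 0), ("en", 1, 1), ("en", 0, 0),
    ("es", 1, 1), ("es", 0, 1), ("es", 1, 0), ("es", 0, 0),
]

scores = accuracy_by_group(test_results)
overall = sum(int(t == p) for _, t, p in test_results) / len(test_results)
print(scores)   # {'en': 1.0, 'es': 0.5}
print(overall)  # 0.75
```

The overall accuracy of 75% looks acceptable, but the per-group view shows the model is no better than a coin flip for Spanish-speaking users.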

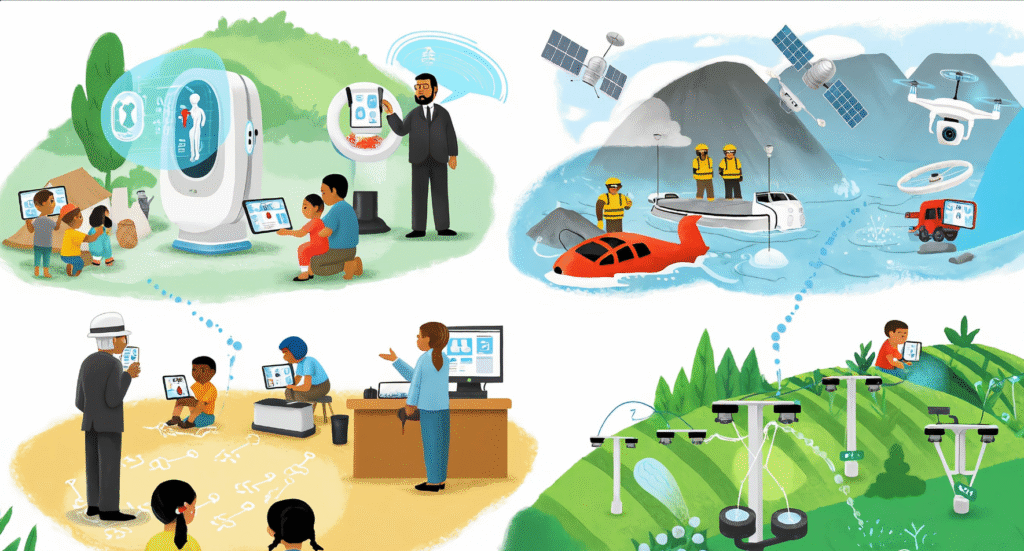

Real-World Applications of AI for Social Good

A. AI in Policing and Criminal Justice

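Tools like COMPAS, which estimates a defendant's risk of reoffending, have faced criticism for racial bias, notably for labeling Black defendants who did not reoffend as high risk more often than white defendants. Newer approaches aim for fairer assessments by excluding racial identifiers, although correlated features such as zip code can still act as proxies for race, so outcomes need to be audited as well.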
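Because the criticism concerned error rates that differed by race, one way to audit a risk tool is to compare false positive rates across groups: among people who did not reoffend, what share were labeled high risk? Group labels are used here only for evaluation, so the check applies even when the model itself does not take race as an input. The records and function name below are hypothetical.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: (group, predicted_high_risk, reoffended) with boolean flags.

    FPR = share of people who did NOT reoffend but were labeled high risk.
    """
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only people who did not reoffend count toward FPR
            negatives[group] += 1
            flagged[group] += int(predicted)
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical audit sample: (group, labeled high risk?, reoffended?)
records = [
    ("X", True, False), ("X", True, False), ("X", False, False),
    ("X", False, False), ("X", True, True),
    ("Y", True, False), ("Y", False, False), ("Y", False, False),
    ("Y", False, False), ("Y", True, True),
]

print(false_positive_rate_by_group(records))  # {'X': 0.5, 'Y': 0.25}
```

In this toy sample, people in group X who never reoffended are twice as likely to be wrongly flagged as those in group Y, the kind of gap that removing racial identifiers alone does not reveal or fix.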

B. AI in Education

Predictive analytics can help identify students at risk of falling behind, including those from underrepresented groups, and suggest personalized learning paths, with the aim of widening access and reducing dropout rates.

C. AI in Hiring

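Some recruitment platforms use bias-aware tools to anonymize resumes, removing names, photos, and other details that can reveal gender, ethnicity, or age, so that candidates are considered on their skills and experience.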
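A minimal sketch of resume anonymization, assuming resumes arrive as structured records. The field names in `IDENTIFYING_FIELDS` are hypothetical; real applicant-tracking systems use their own schemas and typically need more redaction than this.

```python
# Hypothetical field names; real applicant-tracking systems define their own.
IDENTIFYING_FIELDS = {"name", "photo_url", "date_of_birth", "gender", "address"}

def anonymize_resume(resume: dict, candidate_id: str) -> dict:
    """Return a copy of the resume without fields that can reveal identity or demographics."""
    redacted = {k: v for k, v in resume.items() if k not in IDENTIFYING_FIELDS}
    # An opaque ID lets recruiters re-link the candidate after the blind review.
    redacted["candidate_id"] = candidate_id
    return redacted

resume = {
    "name": "Jordan Smith",
    "gender": "F",
    "date_of_birth": "1990-04-12",
    "skills": ["Python", "SQL"],
    "years_experience": 6,
}

print(anonymize_resume(resume, "cand-0042"))
# {'skills': ['Python', 'SQL'], 'years_experience': 6, 'candidate_id': 'cand-0042'}
```

Dropping structured fields is only a starting point: free-text sections such as club memberships or school names can still hint at gender or ethnicity, so outcomes should still be audited.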

D. Healthcare Algorithms

AI applications analyze patient data to detect disparities in diagnosis and treatment, with the goal of ensuring that underserved populations receive equitable care.

Challenges and Criticisms

Despite its promise, AI on segregation is not without its pitfalls.

- Biased Training Data: If the data reflects historical discrimination, models trained on it tend to reproduce it.

- Lack of Regulation: Without global standards, ethical violations may go unchecked.

- Opacity: Black-box AI models are hard to audit, complicating accountability.

- Cultural Biases: Models trained in one region may not apply fairly in others.

Best Practices for Ethical AI Development

- Diverse and Representative Data: Ensure training data reflects diverse demographics to avoid biases.

- Transparency: Clearly disclose when AI is in use and provide explanations of AI-driven decisions.

- Bias Mitigation: Implement techniques like data preprocessing and algorithmic adjustments to minimize biases.

- Privacy Protection: Safeguard user data and ensure compliance with privacy regulations like GDPR.

- Accountability: Establish mechanisms to trace AI decisions and be accountable for outcomes.

- Robustness and Security: Ensure AI systems are secure against attacks and robust in varied environments.

- Human-AI Collaboration: Design AI to augment human capabilities, not replace human judgment entirely.

- Continuous Monitoring and Evaluation: Regularly assess AI systems post-deployment to detect and mitigate biases and other issues.

- Ethics Review Boards: Establish review boards to oversee AI development and deployment decisions.

- Public Engagement: Involve stakeholders in AI development to address concerns and gather diverse perspectives.
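Continuous monitoring can be as simple as recomputing a fairness metric on each new batch of decisions and raising an alert when it crosses a threshold. The sketch below assumes weekly batches of (group, outcome) pairs and borrows the 0.8 cutoff from the US "four-fifths rule" for adverse impact in employment selection; the threshold, batch layout, and function name are illustrative assumptions, and the right metric and cutoff depend on the domain.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # adverse-impact rule of thumb from US employment guidelines

def check_batch(decisions, threshold=FOUR_FIFTHS):
    """Return (disparate impact ratio, alert flag) for one batch of (group, outcome) pairs."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += outcome
    rates = [positives[g] / totals[g] for g in totals]
    highest = max(rates)
    ratio = min(rates) / highest if highest else 1.0
    return ratio, ratio < threshold

# Hypothetical post-deployment batches of (group, 1 = approved, 0 = denied).
weekly_batches = {
    "week 1": [("A", 1), ("A", 0), ("B", 1), ("B", 0)],
    "week 2": [("A", 1), ("A", 1), ("B", 1), ("B", 0)],
}

for week, batch in weekly_batches.items():
    ratio, alert = check_batch(batch)
    print(f"{week}: ratio={ratio:.2f}{'  ALERT' if alert else ''}")
# week 1: ratio=1.00
# week 2: ratio=0.50  ALERT
```

An alert here is a prompt for human review, for example by an ethics review board, rather than an automatic verdict; real batches should also be large enough that a single week's noise does not trigger false alarms.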

Conclusion

AI on Segregation plays a vital role in rethinking how we use technology to bridge rather than widen inequality gaps. With ethical AI, algorithmic fairness, and inclusive development, artificial intelligence can support more equitable outcomes across sectors. While challenges remain, our commitment to socially responsible AI design will help determine how just and inclusive our digital future becomes.

FAQs

1. What does AI on segregation mean?

It refers to using AI to identify and fix systemic inequalities in various social systems.

2. How does ethical AI help in fighting bias?

Ethical AI aims to make systems fair, transparent, and accountable to all users.

3. Can AI be free from bias completely?

Not entirely, but proper design and audits can significantly reduce harmful bias.

4. What sectors benefit from inclusive AI?

Education, justice, healthcare, hiring, and finance gain from fair, inclusive AI applications.

5. Are there laws governing algorithmic fairness?

Some regions have guidelines, but comprehensive AI regulation is still evolving.