Understanding Programming Language Processing

Programming language processing is the core mechanism by which source code is transformed into executable programs. Understanding it is essential for developers, compiler engineers, and computer science enthusiasts alike.

What is Programming Language Processing?

Programming language processing refers to the analysis, transformation, and execution of code written in a programming language. It involves lexing, parsing, and then compiling or interpreting human-readable source code into machine-executable instructions, using tools such as compilers, interpreters, and assemblers. This process ensures that human-readable syntax is correctly translated for execution by the system.

Programming language processors like compilers and interpreters are essential in this workflow. They identify syntax errors, optimize code and manage memory efficiently. Understanding language processing helps in developing better tools and environments for developers.

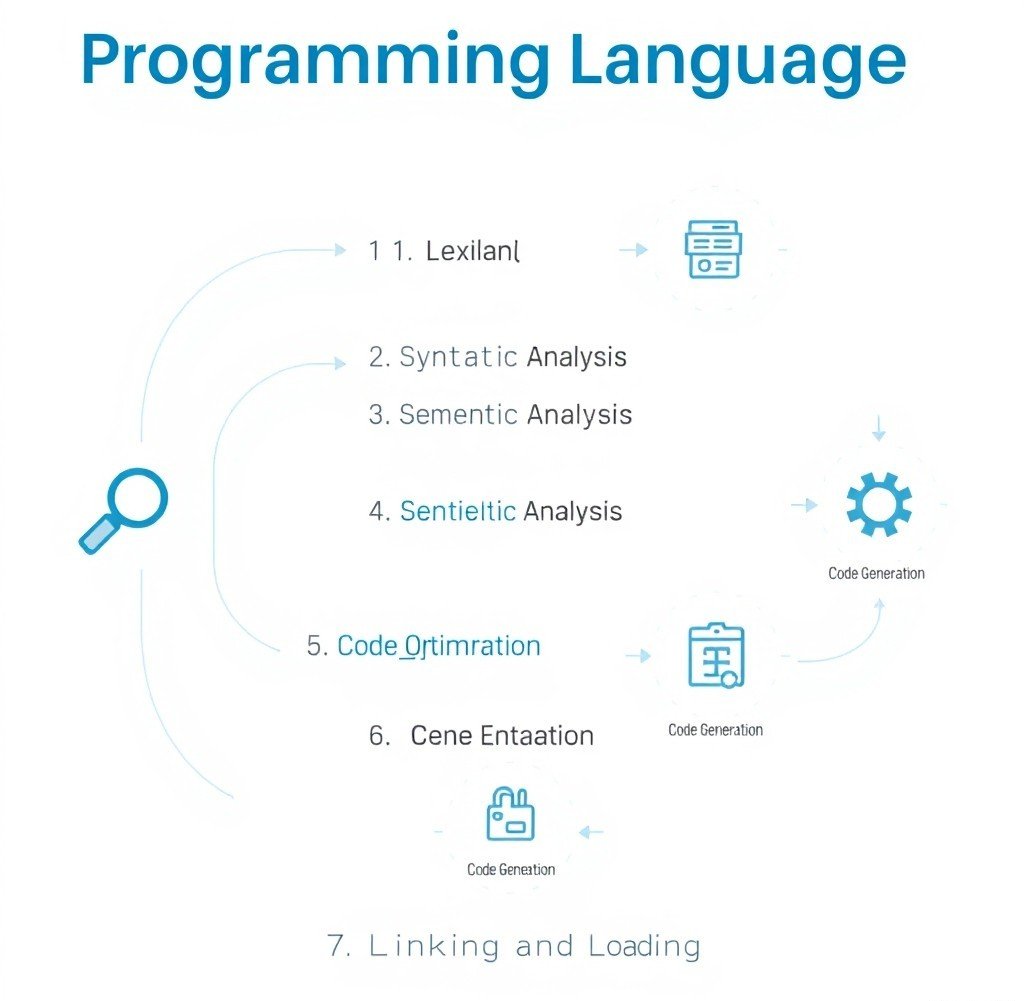

Key Components:

- Lexical Analysis: Breaks code into tokens

- Syntax Analysis: Builds syntax trees from tokens

- Semantic Analysis: Validates meanings and variable types

- Intermediate Code Generation: Transforms the validated syntax tree into a machine-independent intermediate representation

- Code Optimization: Improves performance

- Code Generation: Produces the final executable code

Stages of Programming Language Processing

Programming language processing begins with lexical analysis, where the source code is broken into tokens. Then, syntax analysis checks the code structure against grammatical rules. Next, semantic analysis ensures logical consistency and meaning. Finally, the program is lowered to an intermediate representation, optimized, and translated into machine code for execution.

1. Lexical Analysis

Lexical analysis (or scanning) is the first phase of a compiler. It reads raw source code character by character and groups the characters into tokens: atomic units such as identifiers, keywords, operators, literals, and punctuation. A lexer removes whitespace and comments, producing a stream of tokens for the parser.

For example, given the C snippet:

int max(int a, int b) { return (a > b) ? a : b; }

a lexical analyzer generates tokens like <keyword,int>, <identifier,max>, <operator,(>, <identifier,a>, and so on. This token stream is the foundation for subsequent analysis.
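
To make the token stream concrete, here is a minimal sketch of a regex-based scanner in Python for a small subset of C. The `TOKEN_SPEC` table, the category names, and the `tokenize` function are illustrative assumptions, not part of any real compiler. This sketch labels parentheses as punctuators, whereas the example above groups them with operators; both conventions appear in practice.

```python
import re

# Token classes for a small C subset. Order matters: earlier alternatives win,
# so keywords are tried before identifiers.
TOKEN_SPEC = [
    ("comment",    r"/\*.*?\*/|//[^\n]*"),
    ("whitespace", r"\s+"),
    ("keyword",    r"\b(?:int|return|if|else|while|for)\b"),
    ("identifier", r"[A-Za-z_]\w*"),
    ("literal",    r"\d+"),
    ("operator",   r"[+\-*/<>=!?:]=?"),
    ("punctuator", r"[(){};,]"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC),
                    re.DOTALL)

def tokenize(source):
    """Return a list of (kind, lexeme) pairs, dropping whitespace and comments."""
    tokens, pos = [], 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.lastgroup not in ("whitespace", "comment"):
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens

tokens = tokenize("int max(int a, int b) { return (a > b) ? a : b; }")
print(tokens[:3])  # [('keyword', 'int'), ('identifier', 'max'), ('punctuator', '(')]
```

A production lexer would also track line and column numbers for error messages and handle string and character literals, which this sketch leaves out.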

Learn more about lexers and tokenization in Lexical Analysis on Tutorialspoint.

2. Syntax Analysis (Parsing)

Syntax analysis, or parsing, is the second compiler stage. A parser consumes tokens from the lexer and builds a parse tree or abstract syntax tree (AST) according to the language’s grammar, which is typically defined as a context-free grammar (CFG). Top-down (LL) and bottom-up (LR) parsing techniques are commonly employed.

Consider parsing the expression:

(a + b) * c

An LR parser constructs an AST where * is the root node, the left subtree represents (a + b), and the right leaf is c. Syntax errors, such as missing semicolons or unmatched parentheses, are detected at this stage.
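
The same tree can be built by hand. The sketch below uses recursive descent, a top-down technique, rather than the LR approach mentioned above, because it fits in a few lines. The `Parser` class, the tuple-based AST shape `(operator, left, right)`, and the small grammar in the docstring are assumptions made for illustration.

```python
import re

def tokenize(text):
    """Split an arithmetic expression into names, numbers, and symbols.
    Characters outside this tiny alphabet are silently skipped."""
    return re.findall(r"[A-Za-z_]\w*|\d+|[-+*/()]", text)

class Parser:
    """Recursive-descent parser for the grammar:
         expr   -> term (('+' | '-') term)*
         term   -> factor (('*' | '/') factor)*
         factor -> NAME | NUMBER | '(' expr ')'
    """
    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self):
        token = self.peek()
        self.pos += 1
        return token

    def parse(self):
        tree = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return tree

    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):
            op = self.advance()
            node = (op, node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            op = self.advance()
            node = (op, node, self.factor())
        return node

    def factor(self):
        token = self.advance()
        if token == "(":
            node = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        if token is None or token in ("+", "-", "*", "/", ")"):
            raise SyntaxError(f"unexpected token {token!r}")
        return token  # a name or a number

tree = Parser(tokenize("(a + b) * c")).parse()
print(tree)  # ('*', ('+', 'a', 'b'), 'c')
```

Passing an unbalanced input such as `(a + b` raises `SyntaxError`, which mirrors how a parser reports unmatched parentheses.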

See an overview of parsing techniques in Introduction to Syntax Analysis in Compiler Design on GeeksforGeeks.

3. Semantic Analysis

Semantic analysis verifies that the parse tree adheres not only to syntax rules but also to language semantics, ensuring consistency in types, scoping and declarations. The compiler performs type checking, ensures variables are declared before use and validates function calls.

For example, in C:

int x = "hello"; // Type mismatch: int assigned from string literal

the semantic analyzer reports a type‐incompatibility error. It also handles name resolution and builds symbol tables associating identifiers with their declarations.
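
As a rough illustration of type checking against a symbol table, the sketch below checks a list of declarations written as plain tuples. The tuple encoding, the `SemanticError` class, and the type names are hypothetical; a real compiler would walk its AST and apply the language's full conversion rules.

```python
class SemanticError(Exception):
    pass

def type_of(expr, symbols):
    """Return the type name of a tiny expression given the current symbol table."""
    kind, value = expr
    if kind == "int_lit":
        return "int"
    if kind == "str_lit":
        return "char*"
    if kind == "var":
        if value not in symbols:
            raise SemanticError(f"'{value}' used before declaration")
        return symbols[value]
    raise SemanticError(f"unknown expression kind {kind!r}")

def check(declarations):
    """Check (declared_type, name, initializer) triples.
    Returns the symbol table and a list of error messages."""
    symbols, errors = {}, []
    for declared, name, init in declarations:
        try:
            actual = type_of(init, symbols)
            if actual != declared:
                raise SemanticError(
                    f"cannot initialize '{name}' of type {declared} from {actual}")
        except SemanticError as exc:
            errors.append(str(exc))
        # Record the name even after an error so later uses do not cascade.
        symbols[name] = declared
    return symbols, errors

program = [
    ("int", "x", ("str_lit", "hello")),  # type mismatch
    ("int", "y", ("int_lit", 3)),
    ("int", "z", ("var", "y")),          # fine: y is declared as int
    ("int", "w", ("var", "q")),          # q was never declared
]
symbols, errors = check(program)
for message in errors:
    print(message)
```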

Explore deeper into semantic analysis in Semantic Analysis in Compiler Design on SlideShare.

4. Intermediate Code Generation

After semantic checks, the compiler generates an intermediate representation (IR) that is machine‐independent, facilitating optimization and retargeting. Common IRs include three‐address code (TAC), abstract syntax trees and bytecode.

Example TAC for the statement d = a + b * c:

t1 = b * c
t2 = a + t1
d = t2

This IR simplifies transformations and paves the way for platform‐specific code generation.
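
The lowering from an expression tree to TAC can be sketched in a few lines. The example assumes an AST encoded as nested tuples `(operator, left, right)` with variable names as strings; that encoding and the `to_tac` helper are illustrative choices, not a standard format.

```python
import itertools

def to_tac(target, ast):
    """Lower an expression AST to three-address code that assigns to `target`."""
    code = []
    temps = itertools.count(1)  # numbering for fresh temporaries: t1, t2, ...

    def lower(node):
        if isinstance(node, str):       # leaf: a variable name or constant
            return node
        op, left, right = node
        left_name = lower(left)         # operands are lowered first ...
        right_name = lower(right)
        temp = f"t{next(temps)}"        # ... then the result gets a new temporary
        code.append(f"{temp} = {left_name} {op} {right_name}")
        return temp

    result = lower(ast)
    code.append(f"{target} = {result}")
    return code

# d = a + b * c, with the multiplication nested deeper because it binds tighter
for line in to_tac("d", ("+", "a", ("*", "b", "c"))):
    print(line)
# t1 = b * c
# t2 = a + t1
# d = t2
```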

Read more on IR forms at Intermediate Code Generation in Compiler Design on GeeksforGeeks.

5. Code Optimization

Code optimization improves performance and reduces resource usage without altering program semantics. Optimizations occur at IR or machine‐code level and include constant folding, dead‐code elimination, loop unrolling and strength reduction.

For instance, constant folding transforms:

area = 2 * 3.1416 * r;

into

area = 6.2832 * r;

at compile time. Loop unrolling might convert:

for (int i = 0; i < 4; ++i) sum += arr[i];

into

sum += arr[0];
sum += arr[1];
sum += arr[2];
sum += arr[3];
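
Constant folding is simple enough to sketch directly. The version below works on an AST of nested tuples `(operator, left, right)` whose leaves are numbers or variable names; both the encoding and the `fold` function are assumptions for illustration. It folds only subtrees whose operands are both constants and does not reorder operands, so `2 * r * 3.1416` would be left unchanged.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def fold(node):
    """Return an equivalent AST with constant subexpressions evaluated."""
    if not isinstance(node, tuple):
        return node                      # leaf: a number or a variable name
    op, left, right = node
    left, right = fold(left), fold(right)
    if isinstance(left, (int, float)) and isinstance(right, (int, float)):
        return OPS[op](left, right)      # both operands known: compute now
    return (op, left, right)

# area = 2 * 3.1416 * r is parsed as (2 * 3.1416) * r
folded = fold(("*", ("*", 2, 3.1416), "r"))
print(folded)
```

Division is left out of `OPS` on purpose so the sketch does not have to decide what to do about division by zero at compile time.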

See detailed techniques in Code Optimization in Compiler Design on GeeksforGeeks.

6. Final Code Generation

The final phase translates optimized IR into target‐specific machine code or bytecode. It involves instruction selection, register allocation and assembly code emission. The code generator must manage stack frames, calling conventions, and runtime support.

For example, in 32-bit x86 assembly with a conventional stack frame, mov eax, [ebp+8] loads the first function argument into eax, and add eax, [ebp+12] adds the second, leaving the sum in eax as the return value.
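
To show the shape of instruction selection, the sketch below turns three-address code into x86-style assembly text. It is deliberately naive: every variable is assumed to live in memory under its own name, every operand is assumed to be a variable rather than a constant, and `eax` is the only register used, so no register allocation takes place. The `emit` function and `MNEMONIC` table are hypothetical, and the output is meant to be read, not assembled as-is.

```python
MNEMONIC = {"+": "add", "-": "sub", "*": "imul"}

def emit(tac_lines):
    """Translate TAC lines of the form 'd = x' or 'd = x op y' into assembly text."""
    asm = []
    for line in tac_lines:
        dest, rhs = [part.strip() for part in line.split("=", 1)]
        operands = rhs.split()
        asm.append(f"mov eax, [{operands[0]}]")        # load the first operand
        if len(operands) == 3:                          # form: dest = x op y
            _, op, right = operands
            asm.append(f"{MNEMONIC[op]} eax, [{right}]")
        asm.append(f"mov [{dest}], eax")                # store the result
    return asm

for instruction in emit(["t1 = b * c", "t2 = a + t1", "d = t2"]):
    print(instruction)
```

A real code generator would keep temporaries such as `t1` and `t2` in registers where possible instead of storing and reloading them, which is the job of register allocation.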

Learn about assembly‐level code generation in Final Code Generation PDF from JNTU.

Understanding and mastering programming language processing empowers developers to design custom languages, enhance compiler toolchains, and optimize application performance across platforms. Tools like LLVM and GCC embody these stages, offering extensible frameworks for modern compiler engineering.

Tools Involved in Code Parsing

A. Lexers (e.g., Lex, Flex)

Lexers, also known as scanners, transform raw source code into a stream of tokens: units like identifiers, keywords, and operators. For instance, using Flex you can write a specification that converts C-style comments into COMMENT tokens in one pass. In a real-world scenario, the Linux kernel’s build tooling relies on Flex-generated scanners, for example in Kconfig and the device tree compiler (dtc). Modern IDEs apply the same tokenization techniques for real-time error detection and syntax highlighting.
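
The comment-tokenizing idea can be approximated without Flex. The Python sketch below is an analogy, not Flex syntax: it uses one regular expression to split source text into COMMENT and CODE chunks in a single pass. The `split_comments` helper and the CODE label are assumptions, and comment markers inside string literals are not handled.

```python
import re

# Block comments (/* ... */) or line comments (// ...), tried at each position.
COMMENT = re.compile(r"/\*.*?\*/|//[^\n]*", re.DOTALL)

def split_comments(source):
    """Yield ('COMMENT', text) and ('CODE', text) chunks in source order."""
    pos = 0
    for match in COMMENT.finditer(source):
        if match.start() > pos:
            yield ("CODE", source[pos:match.start()])
        yield ("COMMENT", match.group())
        pos = match.end()
    if pos < len(source):
        yield ("CODE", source[pos:])

for kind, text in split_comments("int x; /* note */ x = 1; // done"):
    print(kind, repr(text))
```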

B. Parsers (e.g., YACC, Bison)

Parsers consume token streams to construct abstract syntax trees (ASTs) based on grammar rules. GNU Bison reads a .y grammar file and generates C code for a parser, LALR(1) by default. A practical example is the PostgreSQL database, whose SQL grammar is written for Bison and turns queries into parse trees for the planner. Not every project uses Bison: SQLite uses its own Lemon parser generator, and GCC replaced its Bison-based C and C++ parsers with hand-written recursive-descent parsers.

C. Compilers (e.g., GCC, Clang)

Compilers integrate lexing, parsing, optimization, and code generation into a unified workflow. The Clang/LLVM project compiles C and C++ code into LLVM IR, which enables language-independent optimizations and JIT compilation. For example, Apple’s Xcode integrates the Clang static analyzer to flag problems such as memory errors in C, C++, and Objective-C code. GCC’s -O2 flag turns on many of the optimization passes described earlier, including function inlining and common subexpression elimination, and it is the level at which performance-critical software such as the Linux kernel is built by default.

D. Interpreters (e.g., Python, Ruby)

Interpreters parse and execute code on the fly, which suits languages with dynamic typing and introspection. CPython compiles .py files into bytecode (cached as .pyc files) and runs it on a stack-based virtual machine, enabling interactive REPL sessions and the auto-reloading development servers of web frameworks like Django. In the Ruby community, the parser gem handles tokenization and AST construction, allowing tools like Solargraph to provide live code completion and inline documentation in editors.
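
CPython exposes this pipeline through built-ins and the standard `dis` module, so the compile-then-execute split can be observed directly. The snippet below is a small sketch; the expression and variable values are arbitrary, and the exact instruction names depend on the CPython version, so none are hard-coded.

```python
import dis

source = "a + b * c"
code = compile(source, "<example>", "eval")  # parse and compile to a code object

# List the bytecode instructions the virtual machine will execute.
opnames = [instruction.opname for instruction in dis.get_instructions(code)]
print(opnames)

# Run the compiled bytecode with concrete values for the free names.
result = eval(code, {"a": 1, "b": 2, "c": 3})
print(result)  # 7
```

Calling `dis.dis(code)` prints a more detailed listing with offsets and arguments.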

Importance in Modern Software Development

Auto-Complete in IDEs

Language servers that implement Microsoft’s Language Server Protocol (LSP) power IntelliSense-style auto-completion in VS Code and other editors, while JetBrains IDEs offer comparable features through their own code-analysis engine. These tools lex and parse code as you type to suggest method signatures, track variable scopes, and offer refactoring recommendations.

Code Formatting

Consistent formatting is enforced by tools like clang-format and Prettier, which parse code into a structured representation (an AST, in Prettier’s case) before rewriting line breaks and indentation. For example, open-source projects like Facebook’s React use Prettier in CI pipelines to catch style violations on pull requests.
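
The parse-then-print idea behind such formatters can be demonstrated with Python's standard `ast` module (`ast.unparse` requires Python 3.9 or later). This is only a sketch of the principle: unlike a real formatter, `ast.unparse` discards comments and ignores line-length limits.

```python
import ast

messy = "x=1;y  =  [ 1,2 ,3 ]\nif x  : print( x,y )"

tree = ast.parse(messy)        # the original layout is gone once this tree exists
formatted = ast.unparse(tree)  # regenerate source text in one canonical style
print(formatted)

# Formatting must not change meaning: both versions parse to the same tree.
same_meaning = ast.dump(ast.parse(formatted)) == ast.dump(tree)
print(same_meaning)  # True
```

Because the output is generated from the tree rather than from the original text, running the formatter on its own output leaves it unchanged.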

Syntax Highlighting

Libraries such as Prism.js and CodeMirror tokenize code and apply CSS classes for highlighting. Real-world applications include documentation site generators like Docusaurus, which uses Prism to render colorful, multi-language code blocks; Hugo takes the same approach with its built-in Chroma highlighter.
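
A token-to-CSS-class highlighter can be sketched in a few lines. The example below is written in Python for a tiny C-like token set; the `tok-...` class names and the `highlight` function are invented for illustration and do not match the class names Prism.js or CodeMirror actually emit.

```python
import html
import re

TOKEN_RE = re.compile(
    r'(?P<comment>//[^\n]*)'
    r'|(?P<string>"(?:\\.|[^"\\])*")'
    r'|(?P<keyword>\b(?:int|return|if|else|for|while)\b)'
    r'|(?P<number>\b\d+\b)'
)

def highlight(code):
    """Wrap recognised tokens in <span class="tok-..."> and HTML-escape the rest."""
    out, pos = [], 0
    for match in TOKEN_RE.finditer(code):
        out.append(html.escape(code[pos:match.start()]))   # text between tokens
        out.append(f'<span class="tok-{match.lastgroup}">'
                   f'{html.escape(match.group())}</span>')
        pos = match.end()
    out.append(html.escape(code[pos:]))
    return "".join(out)

print(highlight("int x = 42; // answer"))
```

A stylesheet would then assign a color to each class, for example one rule for `.tok-keyword` and another for `.tok-comment`.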

Linting and Static Analysis

Linters like ESLint and Python’s Ruff parse source code into ASTs to detect unused variables, enforce style guides, and catch potential bugs. For instance, Airbnb’s JavaScript style guide is codified in an ESLint configuration that many projects adopt to maintain code health.
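
An unused-variable check illustrates how a lint rule reads the AST. The sketch below uses Python's standard `ast` module on Python source; the `unused_names` function is a hypothetical, scope-insensitive simplification, whereas real linters track scopes, imports, and many special cases.

```python
import ast

def unused_names(source):
    """Return sorted (line, name) pairs for names that are assigned but never read."""
    assigned, used = {}, set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                # Remember the earliest line on which the name is assigned.
                assigned[node.id] = min(assigned.get(node.id, node.lineno), node.lineno)
            elif isinstance(node.ctx, ast.Load):
                used.add(node.id)
    return sorted((line, name) for name, line in assigned.items() if name not in used)

sample = "total = 0\ncount = 5\nprint(total)\n"
print(unused_names(sample))  # [(2, 'count')]
```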

Cross-Platform Software Support

Compiler frameworks such as LLVM enable a single codebase to target x86, ARM, and WebAssembly by retargeting IR to different back ends. Languages such as Rust and Swift rely on this capability to support many platforms from one front end. Frameworks like Electron reach portability by a different route, bundling the Chromium and Node.js runtimes so that desktop apps (e.g., Visual Studio Code) run on Windows, macOS, and Linux from one codebase.

Conclusion

Programming language processing, from lexing and parsing to compiling and interpreting, forms the backbone of modern software tooling. By leveraging open-source tools like Flex, Bison, LLVM, and CPython, developers gain powerful features such as live auto-completion, automated formatting, and robust static analysis. Real-world examples, from the Linux kernel’s build to VS Code’s IntelliSense, demonstrate how these components interlock to deliver reliable, performant, and portable applications across diverse platforms.

FAQs

What is a programming language processor?

A language processor is software that translates source code into another form, such as machine code or bytecode, or executes it directly. Examples include compilers, interpreters, and assemblers.

What is the process of language processing?

Language processing involves analyzing and converting source code into executable code. It includes lexical analysis, parsing, semantic analysis, and code generation.

What is language processing in system programming?

In system programming, language processing refers to the work of tools such as compilers, assemblers, and linkers, which convert high-level code into formats the system can execute.

What is language processing, with an example?

Language processing includes steps like translating C code into machine language. For example, a compiler processes “int a = 5;” into binary instructions.

What is processing in computer programming?

Processing refers to executing instructions and handling data to perform tasks. It includes input handling, logic execution, and output generation.

What are the 4 types of computer processing?

The four types are batch processing, real-time processing, time-sharing, and multiprocessing. Each serves different system and application needs.